3D Object Detection based on Lidar and Camera Fusion

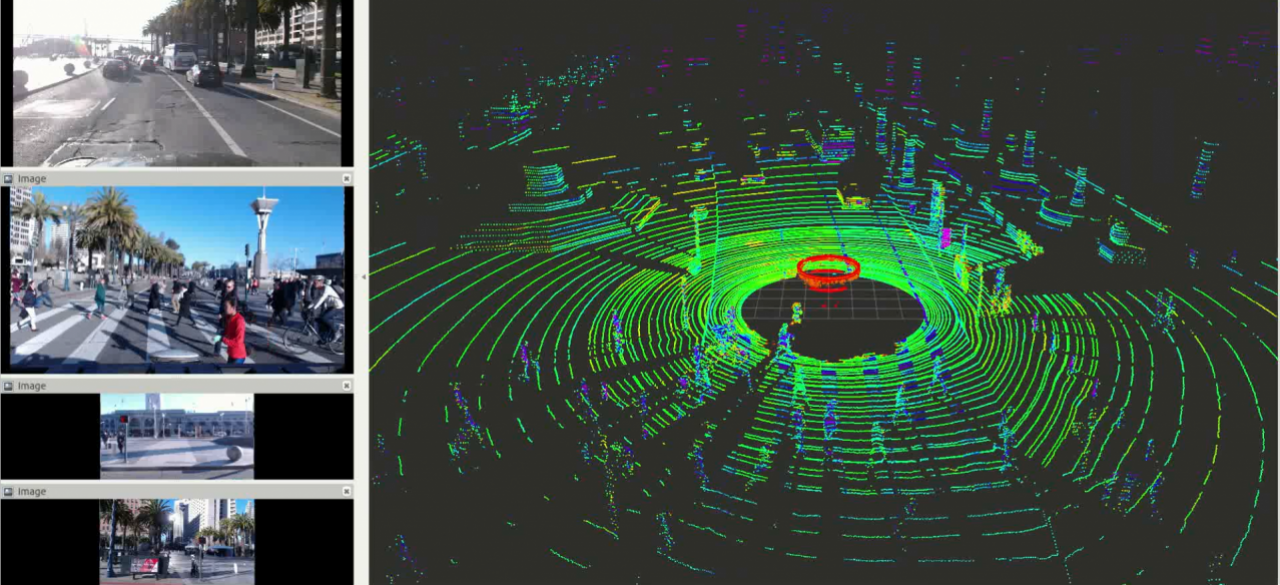

Data collected as a 360-degree point cloud with corresponding images, with help from Dr. Yi-Ta Chuang, Ehsan Javanmardi, and Mahdi Javanmardi.

ABOUT THE PROJECT

At a glance

2D camera images provide rich texture descriptions of the surroundings, but depth is hard to obtain from them. 3D point clouds from Lidar, on the other hand, provide accurate depth and reflection intensity, but their resolution is comparatively low. 2D images and 3D point clouds are therefore potentially complementary, and combining them can support the accurate and robust perception that is a prerequisite for autonomous driving.

Currently, there are several technical challenges in Lidar-camera fusion via convolutional neural networks (CNNs). Unlike RGB images from cameras, Lidar data has no standard input form for a CNN, so processing is required before the 3D omnidirectional point cloud can be fused with the 2D front-view images. Current region proposal networks (RPNs), adapted from typical image processing structures, generate proposals separately and are not suited to learning based on Lidar-camera fusion. Lidar-camera fusion enables accurate position and orientation estimation, but the level at which fusion occurs in the network matters. Little work has been done on position estimation, and all existing work focuses on vehicles.
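One common way to give a CNN an image-like Lidar input is to rasterize the point cloud into a front-view range image. The sketch below is only an illustration of that idea, not the project's actual preprocessing: the function name, the grid size, the field-of-view limits, and the axis convention (x forward, y left, z up) are all assumptions made for the example.

```python
import math


def front_view_range_image(points, width=8, height=4,
                           h_fov=(-45.0, 45.0), v_fov=(-25.0, 3.0)):
    """Rasterize (x, y, z) Lidar points into a front-view range image.

    Assumes x forward, y left, z up. The grid size and field-of-view limits
    (in degrees) are illustrative values, not taken from the project.
    Each cell stores the range of the closest point that falls into it;
    0.0 marks an empty cell.
    """
    image = [[0.0] * width for _ in range(height)]
    for x, y, z in points:
        rng = math.sqrt(x * x + y * y + z * z)
        if rng == 0.0:
            continue  # skip degenerate returns at the sensor origin
        azimuth = math.degrees(math.atan2(y, x))
        elevation = math.degrees(math.atan2(z, math.hypot(x, y)))
        # Keep only points inside the chosen front-view window.
        if not (h_fov[0] <= azimuth <= h_fov[1]):
            continue
        if not (v_fov[0] <= elevation <= v_fov[1]):
            continue
        # Column 0 is the leftmost direction, row 0 is the top of the view.
        col = int((h_fov[1] - azimuth) / (h_fov[1] - h_fov[0]) * width)
        row = int((v_fov[1] - elevation) / (v_fov[1] - v_fov[0]) * height)
        col = min(col, width - 1)
        row = min(row, height - 1)
        if image[row][col] == 0.0 or rng < image[row][col]:
            image[row][col] = rng
    return image
```

Points behind the sensor fall outside the azimuth window and are dropped, which is how the omnidirectional scan is reduced to the camera's front view in this sketch. Other encodings, such as a bird's-eye-view grid, would follow the same pattern with different binning.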

In this project, our goal is to improve 3D object detection performance in driving environments by fusing 3D point clouds with 2D images via a CNN. The RPN is applied to multiple layers of the network so that obstacles of different sizes in the front view are considered. We will preprocess Lidar and camera data from the KITTI benchmark and compare Lidar data processing schemes by examining the contribution of Lidar information to detection. We will compare region proposal accuracy, in the form of 2D or 3D bounding boxes, with other stereo-vision-based and fusion-based networks. Finally, we will collect data from real-world traffic, then preprocess and label it according to the defined input and output of the CNN.
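A typical preprocessing step when pairing Lidar with camera data is to project each Lidar point into the image so that depth can be associated with pixels. The following is a minimal sketch under stated assumptions, not the project's pipeline: `project_lidar_to_image` and `TOY_PROJ` are hypothetical names, and the toy matrix stands in for real calibration. For KITTI, a matrix of this kind is usually composed from the calibration file entries (P2 · R0_rect · Tr_velo_to_cam, with the latter two padded to 4x4).

```python
def project_lidar_to_image(points, proj, image_size):
    """Project (x, y, z) Lidar points to pixel coordinates.

    proj is a 3x4 matrix (list of rows) mapping homogeneous Lidar coordinates
    to homogeneous pixel coordinates. Returns (u, v, depth) tuples for points
    in front of the camera that land inside the image.
    """
    width, height = image_size
    pixels = []
    for x, y, z in points:
        hom = (x, y, z, 1.0)
        u_h, v_h, depth = (sum(r * c for r, c in zip(row, hom)) for row in proj)
        if depth <= 0.0:
            continue  # point is behind the camera
        u, v = u_h / depth, v_h / depth
        if 0.0 <= u < width and 0.0 <= v < height:
            pixels.append((u, v, depth))
    return pixels


# Hypothetical toy calibration: focal length 100 px, principal point (50, 40),
# Lidar axes (x forward, y left, z up) mapped to camera axes
# (x right, y down, z forward). Real values come from a calibration file.
TOY_PROJ = [
    [50.0, -100.0, 0.0, 0.0],
    [40.0, 0.0, -100.0, 0.0],
    [1.0, 0.0, 0.0, 0.0],
]

cloud = [(10.0, 0.0, 0.0), (10.0, 1.0, 0.0), (-5.0, 0.0, 0.0), (10.0, 10.0, 0.0)]
projected = project_lidar_to_image(cloud, TOY_PROJ, image_size=(100, 80))
```

Here the point straight ahead lands on the principal point, the point behind the sensor is discarded, and the point far to the left falls outside the image. The surviving `(u, v, depth)` tuples are what a fusion network could consume as a sparse depth channel aligned with the RGB image.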

| Principal investigators | Researchers | Themes |
|---|---|---|
| Masayoshi Tomizuka | Kiwoo Shin, Zining Wang, Wei Zhan | Convolutional Neural Networks |